Innovative Collaboration for Enhanced AI Capabilities

MediaTek, the chip manufacturer, has announced a collaboration with Meta to run Llama 2, Meta’s open-source large language model (LLM), directly on edge devices. The plan combines Llama 2 with MediaTek’s AI Processing Units (APUs) and its NeuroPilot AI Platform to build a complete edge computing ecosystem. The goal is to speed up the development of generative AI applications for smartphones, IoT devices, vehicles, smart homes, and other edge devices.

Empowering On-Device Processing

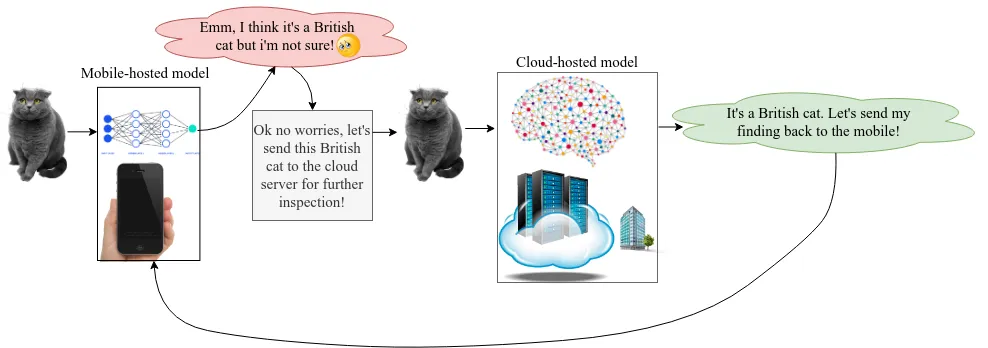

Most generative AI processing today runs in the cloud. MediaTek’s use of Llama 2 models instead lets generative AI applications run directly on the device. According to MediaTek, this gives developers and users several advantages: better performance, greater privacy, improved security and reliability, lower latency, the ability to work in areas with little or no connectivity, and lower operating costs.

JC Hsu, Corporate Senior Vice President and General Manager of MediaTek’s Wireless Communications Business Unit, described the growing popularity of generative AI as a significant trend in digital transformation. He said MediaTek aims to give Llama 2 developers and users the tools they need to innovate in the AI space.

The Key to Success: High-Computing, Low-Power Processors

To take full advantage of on-device generative AI, edge device manufacturers need high-compute, low-power AI processors as well as robust connectivity. MediaTek’s 5G smartphone SoCs (systems-on-chip) already include APUs designed to support a range of AI features, such as AI Noise Reduction, AI Super Resolution, and AI MEMC (motion estimation and motion compensation).

Anticipating the Future: Upcoming Flagship Chipset

MediaTek’s next flagship chipset, scheduled for introduction later this year, will include a software stack optimized to run Llama 2. It will also feature an upgraded APU with Transformer backbone acceleration, reduced memory footprint, and lower DRAM bandwidth use. MediaTek expects these changes to further improve the performance of LLM and AI-generated content (AIGC) workloads.

Paving the Path Forward

MediaTek expects Llama 2-based AI applications to be available on smartphones powered by the new flagship SoC by the end of the year. The collaboration with Meta reflects the broader shift toward on-device AI processing and the growing role of edge computing in AI application development.


Gangtokian Web Team, 25/08/23